After an extended break, here is the first post of 2023. This month I present two tech-related issues: Fintech in Islamic banking and the impact of artificial intelligence (AI) in education. Both topics have occupied me intellectually over the last few decades as an entrepreneur (developing apps in startup companies), as an academic (doing research) and as an educator in the PNG and UAE higher-education sectors.

Fintech has been around for a while, and those of us doing research in this space are talking about its move towards a third phase of its evolution. With technology now firmly embedded in existing financial frameworks, attention is turning to the advantages blockchain could offer in improving the way different financial institutions operate, from operational efficiency to cost savings. In fact, I am attending the 1st ACE-SIP Summer School, to be held in Melbourne, Australia, from 2nd to 3rd Feb 2023, which will feature leaders in the blockchain domain and in the Asia Pacific blockchain and Algorand ecosystem. I will share more on this soon.

However, as with many other technological innovations, the uptake of some financial innovations in the Muslim community has been a bit slow. Certain segments of Muslim investors and consumers have so far been unable to take full advantage of 21st-century banking. Even so, I feel that Islamic finance is an area primed for digital disruption, and that this disruption is upon us.

According to Refinitiv's Islamic Finance Development Indicator*, the Islamic finance sector is projected to reach $4.9tn (£3.7tn) in 2025. S&P Global Ratings also projects the industry to grow by 10 to 12 per cent over 2021 and 2022. And the UK is on a mission to become a global hub for Sharia-compliant finance. It is important for Muslim financial professionals to understand more about Fintech principles as well as its current and future impact on savings and investment.

Having built a Shariah-compliant global finance industry now worth US$3 trillion, Islamic legal experts are now grappling with the question of whether cryptocurrencies are permissible for the world’s 1.8 billion Muslims. Just how are religious laws from the 7th century adapted to meet the present-day needs and economic aspirations of believers? How do Islamic jurists decide what is halal, and what happens when there’s disagreement? And how will cryptocurrencies and other emerging technologies fit into the future of Islamic finance?

Early last year I listened to a podcast that I want to share with you. In it, Dr Ryan Calder, who researches the social impacts of Shariah law, and Hassan Jivraj, an Islamic finance journalist, probe these issues with presenter Ali Moore. If you want to listen to the podcast, click on https://player.whooshkaa.com/episode?id=984998.

For those of you who want to read it or share it with others, I have uploaded the document here: https://ayuub.org/wp-content/uploads/2023/01/IslamicFinance.pdf. This podcast was recorded on the 8th of March 2022. Producers were Kelvin Param and Eric van Bemmel. Ear to Asia is licensed under Creative Commons, copyright 2022, the University of Melbourne.

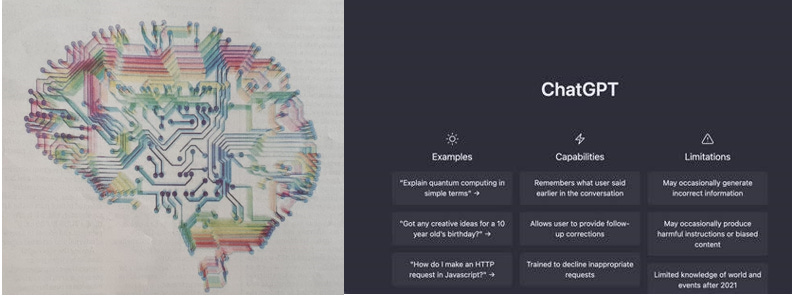

Let me digress and move on to the appearance of ChatGPT on November 30, 2022. Millions subscribed within weeks, and AI has transformed itself from a steady stream of research and development over a period of half a century to a gushing fire hydrant of technological innovation that, depending on whom you ask, suddenly promises to revolutionise and democratise entire fields of human endeavour, or threatens to bankrupt them.

I was meeting friends at Stanford University and in the Silicon Valley vicinity in the last week of December 2022, and it was hard to avoid ChatGPT in any of our meetings. OpenAI, the company behind ChatGPT, which has a strategic alliance with Microsoft, trained its large language model (LLM) GPT-3 not to parrot hateful or harmful content in its responses to prompts. My friends who work in tech or do tech research were blasé about this latest innovation. They pointed out to me that LLMs with names like GPT (Generative Pre-trained Transformer) from OpenAI in 2018, “BERT” (Bidirectional Encoder Representations from Transformers) from Google in 2018, and RoBERTa from Facebook in 2019 have been released at a steady pace, typically with open-source licensing that allowed competing researchers to learn from each other.

Somehow, I felt and still do feel that ChatGPT is a game changer. LLMs trace their roots back to the natural language processing (NLP) research of the 1950s and 1960s, but progress in NLP began to shift from the “gradual” phase to the “sudden” phase in 2017, when a team of researchers at Google published a paper called “Attention Is All You Need”, revolutionising the field and giving rise to a new breed of machine learning.

The paper proposed a way of analysing text, known as a “transformer”, that, rather than just analysing a word in the context of the word that came before it, could look at an entire input sequence (such as a sentence or a paragraph) and weigh up which parts of the sequence were most relevant to the output the NLP engine was asked to generate. Suddenly, the model could create a correlation between words at the beginning of a sentence and words in the middle or at the end, vastly improving the model’s performance.
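For readers who like to see the idea in code, here is a minimal sketch of that "weighing up" step, usually called self-attention. It is a toy under stated assumptions, not the method of any real model: the three-number word vectors are made up for illustration, the function names are my own, and a real transformer would first pass each vector through learned projections, which I skip here.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Let every word look at every word in the sequence.

    Assumption: queries, keys and values are the input vectors themselves
    (no learned projections), which keeps the sketch short.
    """
    dim = len(vectors[0])
    outputs, all_weights = [], []
    for query in vectors:
        # Score this word against every word, including distant ones.
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        weights = softmax(scores)
        # Blend all word vectors according to their relevance weights.
        mixed = [
            sum(w * value[i] for w, value in zip(weights, vectors))
            for i in range(dim)
        ]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

# Hypothetical toy embeddings, invented for this example only.
sentence = ["the", "bank", "approved", "the", "loan"]
embeddings = [
    [1.0, 0.0, 0.0],
    [0.0, 1.0, 0.5],
    [0.0, 0.2, 1.0],
    [1.0, 0.0, 0.0],
    [0.0, 0.9, 0.6],
]

outputs, weights = self_attention(embeddings)
for word, row in zip(sentence, weights):
    print(word, [round(w, 2) for w in row])
```

Each printed row shows how much one word attends to every word in the sentence. With these made-up vectors, "bank" gives more weight to "loan" at the end of the sentence than to the neighbouring "the", which is the kind of long-range link described above.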

In the Australian Financial Review of 24 January 2023, John Davidson asked ‘IS CHATGPT MAGICAL OR THE APOCALYPSE?’, a question that caught my attention. The following section is a summary of his article.

“Knowledge work is now far more under threat than it ever has been in history, and we’re soon going to see a lot of change in what our workforces look like very soon,” he says. It’s not a bad thing. “Things change and jobs change and the nature of work changes, and we as humans roll on,” he says. The big question is, where are we rolling? ChatGPT is an LLM machine-learning chatbot that, according to the chatbot itself, was created by analysing billions of sentences of text taken from the internet. The analysis built a statistical model that’s able to create human-like conversation by predicting which words are most likely to follow a given prompt (such as the question “how does ChatGPT work?”), and which word is most likely to follow or precede the word before or after it.
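To make "predicting which words are most likely to follow" concrete, here is a deliberately tiny sketch. It is not how ChatGPT works internally: I have swapped in a simple bigram count model (counting which word follows which) in place of a neural network, and the one-line corpus and the function names are my own inventions for illustration.

```python
from collections import Counter, defaultdict

def train_bigram_model(text):
    """Count, for every word, which words follow it in the text."""
    words = text.lower().split()
    follow_counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        follow_counts[current][following] += 1
    return follow_counts

def predict_next(model, word):
    """Return the word seen most often after `word`, or None if unseen."""
    candidates = model.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]

# Hypothetical miniature corpus; a real LLM learns from billions of sentences.
corpus = (
    "islamic finance is growing and islamic finance is changing "
    "and islamic banking is growing"
)

model = train_bigram_model(corpus)
print(predict_next(model, "islamic"))
print(predict_next(model, "is"))
```

The model simply picks the most frequent follower it has seen. An LLM does something far richer, using the whole prompt rather than a single preceding word, but the underlying idea of choosing a statistically likely continuation is the same.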

In an effort to build a more ethical version of GPT-3 (the version that became known as GPT-3.5, which is used by the ChatGPT chatbot) OpenAI hired humans to painstakingly provide the LLM with the mathematical equivalent of penalties and treats, whenever it did or did not come up with harmful or hateful responses.

I guess what we need, as an example of better use of this technology, is to create a machine learning model that could help electricity providers lower the cost of maintaining powerlines, etc. I read about the Unleash live initiative, which used a generative AI to create millions of artificial photographs of cracked electrical insulators, and then used those images to repeatedly train a computer vision system until it could spot a crack in an insulator in a real photo. This, to me, is what is exciting about the use of technology for the betterment of society.

At the moment, that computer vision system can trawl through countless hours of imagery taken from drones flying close to powerlines, and automatically identify problems that humans should look into. OpenAI, the company behind ChatGPT, which has a strategic alliance with Microsoft, also trained its LLM, GPT-3, not to parrot hateful or harmful content in its responses to prompts.

I should quickly add, however, that the ethics of OpenAI’s method for creating an ethical AI have since been questioned. A recent investigation by Time revealed that OpenAI hired an outsourcer that used low-paid workers in Kenya to teach GPT-3 how to identify toxic content, and avoid reproducing that content in its outputs. The toxic content the workers had to deal with “described situations in graphic detail like child sexual abuse, bestiality, murder, suicide, torture, self harm, and incest,” Time reported. Here is the article: https://time.com/6247678/openai-chatgpt-kenya-workers/

To conclude, technology is a tool that we humans have invented on the assumption that we have control over its use. AI-enabled Fintech and ChatGPT are challenging that assumption. That is a scary notion as we ask ourselves whether we want to place our trust in knowledge and financial decisions synthesised by AI. So far the answer from many is no, while some, including me, lean towards yes. And you?

* https://www.refinitiv.com/perspectives/market-insights/how-is-the-islamic-finance-development-indicator-embracing-industry-change/